Karl Robinson

July 19, 2021

Karl is CEO and Co-Founder of Logicata – he’s an AWS Community Builder in the Cloud Operations category, and AWS Certified to Solutions Architect Professional level. Knowledgeable, informal, and approachable, Karl has founded, grown, and sold internet and cloud-hosting companies.

A lack of cost transparency is one of the most common reasons people hesitate to migrate projects to the public Cloud. At first glance, pricing for AWS can appear fairly complex. Concerns over data usage, storage usage, and the level of computing power that a customer actually gets for their money can complicate what would otherwise be a fairly straightforward decision.

But when those costs are broken down into easy-to-understand terms, they are quickly demystified. Pricing for AWS isn’t as complex as it first appears, and there are even some handy calculators and budget trackers that can help with the cost projection process.

The decision to use public Cloud resources for a project then becomes an assessment of how efficiently the server and software run on virtual machines (VMs), what kind of permanent storage is required, and whether there’s a cost saving to be had by using AWS over traditional server colocation.

The goal of this article is to create a somewhat foolproof way to estimate monthly pricing for AWS.

Cloud VS Tin

Assuming that the reader is trying to migrate away from the pain, and the in-house expertise, that on-site hosting demands, the first decision is whether it’s better to colocate in a data center or to make use of Cloud resources.

This is not an all-or-nothing decision. Hybrid solutions that incorporate both Cloud and cold hard tin are common these days. However, for each major aspect of the project, an analysis needs to be made to determine where a particular function should take place.

One of the big considerations is licensing costs. Public Cloud providers can often leverage massive purchasing power across millions of clients to get cheap licenses for various operating systems and software suites. A colocated server is stuck with whatever license costs the client can negotiate on its own.

Dynamic Expansion

Another consideration is dynamic expansion. Switching to a bigger, more powerful server in the Cloud is trivial, and can be done simply by selecting a new plan. Expanding a rack-mounted setup means buying more hardware, and possibly renting more rack space.

On the other hand, government or industry standard compliance is often easier on real hardware. The ability to audit and show logs and version numbers all the way down to the BIOS level is an advantage of real hardware. So much in the Cloud is virtualized or obfuscated that it can displease certain regulators.

Similarly, security can be locked down far more tightly on a physical machine than on a virtual one. There is no threat of ‘leakage’ across a virtual demarcation, and no threat of a denial-of-service attack on a neighboring host interfering with operations (other than purely bandwidth-based ones).

A/B Testing

After taking all of that into account, it is time to perform A/B testing.

An A/B test simply shows how efficiently a software suite runs on a physical setup versus a virtual one. With the exact same workload on both systems, it becomes possible to calculate how efficiently certain operations run on one or the other.
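One way to turn A/B results into a cost comparison is to divide each setup’s hourly cost by the throughput it achieved under the same workload. The sketch below assumes the test measured requests per hour; the `Setup` class, the `cost_per_million` helper, and every figure in it are hypothetical placeholders rather than real measurements.

```python
from dataclasses import dataclass


@dataclass
class Setup:
    name: str
    hourly_cost: float        # USD per hour: amortized hardware + rack rent, or instance price
    requests_per_hour: float  # throughput measured under the identical A/B workload


def cost_per_million(setup: Setup) -> float:
    """USD needed to serve one million requests at the measured throughput."""
    return setup.hourly_cost / setup.requests_per_hour * 1_000_000


# Hypothetical figures for illustration only; substitute real test results.
physical = Setup("colocated server", hourly_cost=0.40, requests_per_hour=200_000)
virtual = Setup("cloud instance", hourly_cost=0.17, requests_per_hour=120_000)

for s in (physical, virtual):
    print(f"{s.name}: ${cost_per_million(s):.2f} per million requests")
```

Normalizing by work done, rather than comparing hourly prices directly, keeps a slower but cheaper machine from looking better than it really is.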

This is normally done with the aid of a performance tester, using performance software such as New Relic, SOASTA CloudTest, and the like. For smaller-scale tests, a DevOps engineer with OS-level monitoring tools might be enough.

After examining the performance testing data and relative costs, it should be a simple decision…

…assuming calculating the Cloud costs is also a simple task.

Determining Pricing for AWS – The Roles

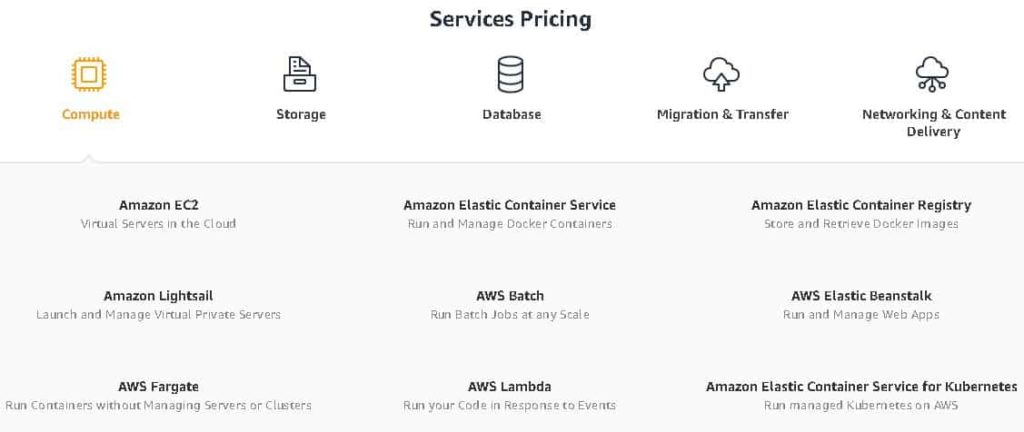

The type of AWS service required is largely determined ahead of time. These days, AWS has nine main compute service offerings that can be further condensed into four roles.

Amazon EC2 and Lightsail: Virtual servers, either publicly accessible or private.

Amazon Elastic Container Service (ECS), Elastic Container Registry (ECR), Elastic Kubernetes Service (EKS), or AWS Fargate: Container instances, images, and container-related services.

AWS Lambda: Serverless functions that run code in response to certain events and conditions.

AWS Batch and AWS Elastic Beanstalk: Running batch jobs and web apps.

One or more of these roles and services will fit the parameters of just about any project. Building an entire environment from scratch and then opening it up for testing is generally an EC2 role, unless the environment happens to be containerized, in which case it’s either ECS or EKS.

Determining Pricing for AWS – Preemptible or On-Demand

One of the reasons to look into containerization is to take advantage of preemptible VMs without losing service integrity. For example, Kubernetes clusters display ‘self-healing’ properties: they can automatically restart failed containers and reschedule workloads onto healthy nodes. As long as the application being run doesn’t require true 24/7 uptime, preemptible instances can save up to 90% compared with regular On-Demand instances. Depending on the scale of the deployment, that could mean thousands, or tens of thousands, of dollars saved for every node.

EC2’s preemptible VMs are called ‘Spot’ instances. If the application stack can be designed to be self-healing and self-deploying (and it doesn’t require round-the-clock uptime), Spot instances offer savings similar to those of preemptible Kubernetes nodes.

AWS Pricing Calculator

All of these options can be seen in the AWS Pricing Calculator. This handy tool lets the consumer make rough calculations based on the computing power, storage, database, migration, network usage, and anything else they need.

For a simple example, select Compute under Service Pricing, then select EC2. There is a Free Tier that allows 750 hours a month of basic (t2.micro or t3.micro) usage for the first 12 months, which is more useful for familiarizing an individual or team with the interface and available options than for anything practical. Otherwise, select either the On-Demand or Spot pricing options.

As of early July 2021, the On-Demand price of an ‘a1.large’ Linux instance in U.S. East with 2 vCPUs and 4 GB of RAM was $0.0510 an hour. Compare that to $0.0098 an hour for a Spot instance, less than one fifth of the cost.

Similarly, an On-Demand ‘t3.large’ Windows instance in U.S. East with 2 vCPUs and 8 GB of RAM was $0.1108 an hour, while the equivalent Spot instance was $0.0526, less than half the cost.
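Hourly prices are easier to judge as monthly bills. The sketch below multiplies the July 2021 prices quoted above by 730 hours (24 hours × 365 days ÷ 12 months), the average month length commonly used for AWS estimates. Spot prices fluctuate with demand, so treat the Spot figures as a snapshot rather than a guaranteed rate.

```python
HOURS_PER_MONTH = 730  # 24 hours * 365 days / 12 months

# Hourly prices quoted above (U.S. East, early July 2021); Spot prices fluctuate.
prices = {
    "a1.large Linux": {"on_demand": 0.0510, "spot": 0.0098},
    "t3.large Windows": {"on_demand": 0.1108, "spot": 0.0526},
}


def monthly(hourly: float) -> float:
    """Cost of running one instance for a full average month."""
    return hourly * HOURS_PER_MONTH


for name, p in prices.items():
    on_demand, spot = monthly(p["on_demand"]), monthly(p["spot"])
    print(f"{name}: On-Demand ${on_demand:.2f}/month, Spot ${spot:.2f}/month "
          f"({spot / on_demand:.0%} of On-Demand)")
```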

The lesson here is simple: only use On-Demand when 24/7 availability with zero gaps is absolutely critical. The same principle applies to other AWS services that offer interruptible capacity: self-healing, automatically deploying workloads are far more cost-effective on preemptible VMs.

Determining Pricing for AWS – Storage

The next cost to calculate is permanent Cloud storage, assuming that’s required. In some cases, the data resulting from a compute instance is simply transferred to a permanent server or storage array elsewhere, and no Cloud storage is needed.

The two main costs for storage are how much data is stored and how frequently it is accessed. As of early July 2021, general-purpose, frequently accessed storage (S3 Standard) in U.S. East cost $0.023 per GB per month for the first 50 TB. Infrequently accessed data (S3 Standard-Infrequent Access) cost $0.0125 per GB per month. Archived data (S3 Glacier) that took between 1 minute and 12 hours to bring back online cost $0.004 per GB per month, and deep archives (S3 Glacier Deep Archive) that would only be accessed a couple of times a year, with a 12-hour lead time, cost $0.00099 per GB per month.
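A quick way to see how much the storage class matters is to price the same data set in each class. This sketch uses the July 2021 per-GB-month prices quoted above and a hypothetical 5,000 GB data set. It deliberately ignores tiered pricing above 50 TB, retrieval fees, request charges, and minimum storage durations, so it shows storage cost alone.

```python
# Per-GB-month prices quoted above (U.S. East, early July 2021).
STORAGE_PRICES = {
    "S3 Standard": 0.023,
    "S3 Standard-IA": 0.0125,
    "S3 Glacier": 0.004,
    "S3 Glacier Deep Archive": 0.00099,
}


def monthly_storage_cost(gb: float, storage_class: str) -> float:
    """Storage-only cost for one month; excludes requests and retrievals."""
    return gb * STORAGE_PRICES[storage_class]


data_gb = 5_000  # hypothetical 5 TB backup set
for cls in STORAGE_PRICES:
    print(f"{cls:<25} ${monthly_storage_cost(data_gb, cls):>8.2f} per month")

ratio = STORAGE_PRICES["S3 Standard"] / STORAGE_PRICES["S3 Glacier Deep Archive"]
print(f"S3 Standard costs {ratio:.1f}x as much per GB as Glacier Deep Archive")
```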

What Type of Storage do you need?

Knowing the kind of storage a project needs is vital, particularly if it is part of a ‘deep backup’ project that would only be needed for disaster recovery. At the prices above, keeping data in general-purpose storage costs more than twenty times as much per GB as keeping it in a deep archive.

The number of times stored data is modified or accessed also incurs request and network usage costs. In July 2021, standard S3 storage charged $0.005 for every 1,000 PUT, COPY, POST, or LIST requests, and $0.0004 for every 1,000 GET, SELECT, and other requests. Occasional requests are cheap, but the charges add up if the storage system is constantly hammered with requests. Additionally, transfers into S3 are free, but after the first complimentary GB of outbound transfer to the internet each month, $0.09 per GB is charged (for the first 10 TB).
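Request and transfer charges can be estimated the same way. The function below is a sketch built on the July 2021 figures quoted above; it assumes all outbound traffic stays within the first $0.09-per-GB tier, and the monthly request and traffic volumes in the example are hypothetical.

```python
PUT_PER_1000 = 0.005   # PUT, COPY, POST, LIST requests
GET_PER_1000 = 0.0004  # GET, SELECT, and other requests
EGRESS_FREE_GB = 1     # first GB out to the internet each month was free (July 2021)
EGRESS_PER_GB = 0.09   # assumes all traffic falls in the first (up to 10 TB) tier


def monthly_access_cost(puts: int, gets: int, egress_gb: float) -> float:
    """Request charges plus outbound transfer for one month of S3 Standard usage."""
    request_cost = puts / 1000 * PUT_PER_1000 + gets / 1000 * GET_PER_1000
    billable_egress = max(0.0, egress_gb - EGRESS_FREE_GB)  # inbound transfer is free
    return request_cost + billable_egress * EGRESS_PER_GB


# Hypothetical month: 2M writes, 50M reads, 500 GB served to the internet.
cost = monthly_access_cost(puts=2_000_000, gets=50_000_000, egress_gb=500)
print(f"Requests and transfer: ${cost:.2f} per month")
```

In this example the outbound transfer costs more than all the requests combined, which is why a frequently downloaded data set deserves a closer look than one that is mostly written and rarely read.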

This cost evaluation process should be repeated for every other required service on the main list. Each time the project identifies an AWS service that it needs, it can be added to a running estimate. The AWS Pricing Calculator can group these services into an overall project estimate that tracks costs as they’re identified and entered. This is the most foolproof way to get an accurate monthly estimate of AWS Cloud costs.
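Conceptually, a running estimate is just a set of monthly line items that gets summed as each service is identified. The `RunningEstimate` class below is a hypothetical stand-in for that idea, not an AWS API, and its line items reuse the July 2021 prices quoted in this article.

```python
from dataclasses import dataclass, field
from typing import Dict

HOURS_PER_MONTH = 730


@dataclass
class RunningEstimate:
    """Hypothetical helper: monthly USD line items keyed by service description."""
    items: Dict[str, float] = field(default_factory=dict)

    def add(self, service: str, monthly_usd: float) -> None:
        # Adding the same service twice accumulates rather than overwrites.
        self.items[service] = self.items.get(service, 0.0) + monthly_usd

    def total(self) -> float:
        return sum(self.items.values())


estimate = RunningEstimate()
estimate.add("EC2: 2 x t3.large Windows, On-Demand", 2 * 0.1108 * HOURS_PER_MONTH)
estimate.add("S3 Standard: 500 GB", 500 * 0.023)
estimate.add("S3 transfer out: 100 GB (first GB free)", (100 - 1) * 0.09)

for service, cost in estimate.items.items():
    print(f"{service:<42} ${cost:>8.2f}")
print(f"{'Total':<42} ${estimate.total():>8.2f}")
```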


Tips and Tricks

- Start small, scale up: One way organizations waste money is overprovisioning, because these services cost ‘pennies’ an hour. But pricing for AWS can be deceptive, as demonstrated above: once the other fees are factored in, a savvy AWS user realizes that pennies quickly add up to big bucks. Leave a little room for edge cases, but only provision what a project will realistically use. It’s easy enough to relaunch on a more robust VM if performance becomes an issue.

- Snapshot and shut down instances after use: A habit that all VM users need to get into is snapshotting their experimental servers when the workday is done, and then shutting them down. Leaving instances running idle all night when they have no 24/7 usage is simply a waste, and can double or triple running costs, or more if they perform some sort of automation that accesses Cloud storage frequently.

- Containerizing projects costs slightly more up front, but saves in the long term: There’s a reason that containers have taken off in recent years. Knowing exactly how much CPU and memory a container needs, so that it can be placed on an appropriately sized server, is only half of the benefit. The other half is the ability to leverage self-healing to use far cheaper preemptible VMs. If given the option to containerize or not, as far as Cloud costs are concerned, containerize.

- Make use of AWS Budgets: AWS has its own budgeting and cost warning system called AWS Budgets. Alerts can be issued when certain thresholds are reached, or when estimates get exceeded. Budget notifications are free to set up and free to use. Additional reporting and automation is available at a low per-instance cost.

- Read the AWS Cloud Financial Management Guide: Whoever is paying the bills for AWS needs to read this guide. It’s dry stuff, but contains vital information about how best to track Cloud-based IT spending. This includes guides for transitioning existing projects to AWS, and for building new projects with Cloud efficiency in mind.

- Watch the AWS Optimise Compute video: While not exactly a barn-burner, this video is critical to building hybrid Cloud solutions on AWS that fully leverage the right combination of On-Demand, Spot, and other offerings to minimize costs.

- Understand Regions and Zones: The physical location of a Cloud server might not matter that much to the end user, but it should matter a great deal to the organization provisioning it. Selecting the correct region can reduce costs dramatically. Selecting the wrong region can increase ping times between the office and the VM by quite a bit. The right balance of cost and physical (and virtual) proximity must be struck. And there’s also the consideration of spreading persistent servers across a couple of different regions or zones as a disaster recovery hedge.

- Make use of AWS Trusted Advisor: Trusted Advisor is an automated service that inspects an AWS account and recommends ways to secure, optimize, and minimize costs, which is especially valuable on medium to large deployments. The full set of checks requires a Business or Enterprise Support plan, which also provides access to AWS support engineers whose inside knowledge of both the compute services and the billing system is invaluable.
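To put numbers on the ‘Snapshot and shut down’ tip, the sketch below compares an instance left on around the clock with one that runs only during a hypothetical 10-hour, five-day work week, using the t3.large Windows On-Demand price quoted earlier. It covers instance hours only: EBS volumes and snapshots continue to be billed while an instance is stopped.

```python
HOURS_PER_MONTH = 730      # average month, running 24/7
WEEKS_PER_MONTH = 52 / 12  # about 4.33 weeks


def always_on(hourly: float) -> float:
    """Monthly cost of an instance that is never stopped."""
    return hourly * HOURS_PER_MONTH


def office_hours(hourly: float, hours_per_day: float = 10, days_per_week: int = 5) -> float:
    """Monthly cost if the instance only runs during working hours (hypothetical schedule)."""
    return hourly * hours_per_day * days_per_week * WEEKS_PER_MONTH


price = 0.1108  # t3.large Windows On-Demand, U.S. East, July 2021
print(f"Always on:    ${always_on(price):.2f}/month")
print(f"Office hours: ${office_hours(price):.2f}/month")
print(f"Leaving it on multiplies the instance bill by {always_on(price) / office_hours(price):.1f}x")
```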
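The AWS Budgets alerts mentioned in the tips above can also be created programmatically. The sketch below builds the arguments for the `create_budget` call on boto3’s `budgets` client: a monthly cost budget that emails alerts when actual spend crosses chosen percentages of the limit and when forecasted spend is expected to exceed it. The `budget_request` and `create_budget` helper names, the account ID, budget name, limit, and email address are all placeholders; the request is built separately from the AWS call so it can be inspected without credentials.

```python
def budget_request(account_id: str, name: str, monthly_limit_usd: float,
                   email: str, actual_thresholds=(80.0, 100.0)) -> dict:
    """Build keyword arguments for boto3's Budgets client create_budget()."""
    subscribers = [{"SubscriptionType": "EMAIL", "Address": email}]
    # Alert when actual spend passes each percentage of the limit...
    notifications = [
        {
            "Notification": {
                "NotificationType": "ACTUAL",
                "ComparisonOperator": "GREATER_THAN",
                "Threshold": float(pct),
                "ThresholdType": "PERCENTAGE",
            },
            "Subscribers": subscribers,
        }
        for pct in actual_thresholds
    ]
    # ...and when the month is forecast to exceed the limit.
    notifications.append({
        "Notification": {
            "NotificationType": "FORECASTED",
            "ComparisonOperator": "GREATER_THAN",
            "Threshold": 100.0,
            "ThresholdType": "PERCENTAGE",
        },
        "Subscribers": subscribers,
    })
    return {
        "AccountId": account_id,
        "Budget": {
            "BudgetName": name,
            "BudgetLimit": {"Amount": f"{monthly_limit_usd:.2f}", "Unit": "USD"},
            "TimeUnit": "MONTHLY",
            "BudgetType": "COST",
        },
        "NotificationsWithSubscribers": notifications,
    }


def create_budget(request: dict) -> None:
    """Send the request to AWS (requires boto3 and valid credentials)."""
    import boto3  # imported lazily so budget_request() works without boto3 installed
    boto3.client("budgets", region_name="us-east-1").create_budget(**request)


# Placeholder values; replace them before calling create_budget(request).
request = budget_request("123456789012", "monthly-cost-cap", 500, "billing@example.com")
print(request["Budget"])
```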

In Conclusion

By making all of a project’s Cloud cost estimates and entering them into the AWS Pricing Calculator, an organization can avoid nasty surprises. Pricing for AWS can be tricky. But the simple guidelines above, in addition to the tips and tricks that point towards valuable online resources, should set an organization up for success in its venture into the Amazon cloud.

One important thing to remember: Some projects are simply done better with colocation, or by using a hybrid strategy. This isn’t a failure of project design; it is simply the reality of some requirement sets. In cases such as these, there’s no shame in simply using what works. Just stay vigilant about changing government and organizational standards, advances in Cloud technology, and changes in Cloud pricing. In the future, a reevaluation of the same project might yield different results.

You’re not alone!

Final note: There are professional services out there that can help with the planning, budgeting, and implementation of AWS. The Logicata Team are well positioned to help – check out our AWS Migration Services for more info. Sometimes it’s a good idea to let the professionals do the detail work, as they know all of the tricks for achieving maximum performance while staying within a fixed budget.